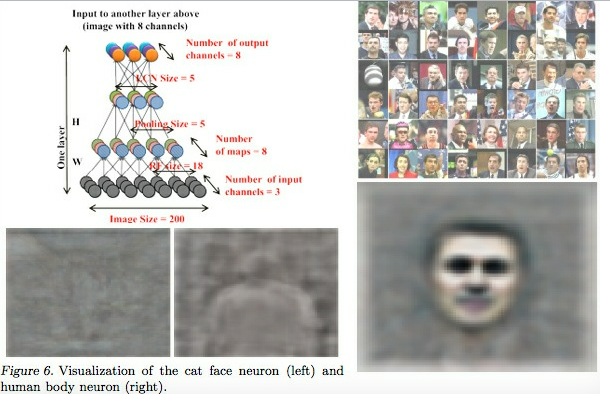

Google X has managed to get a computer to understand what makes a face a face, and what a cat looks like, without any external help.

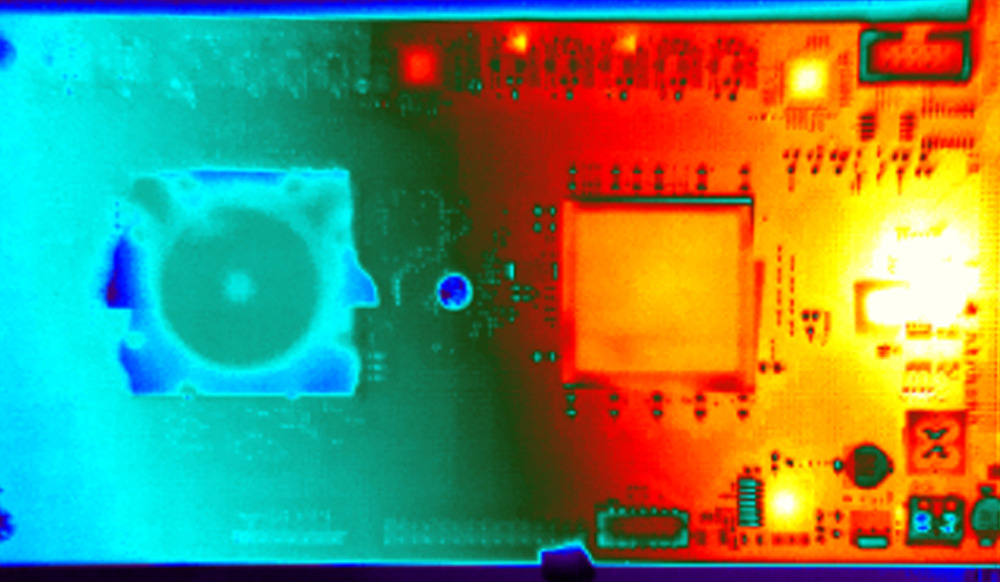

First, they switched up 1,000 computers in a cluster to access a lot of computing power. These virtual neural networks were then fed with 10 million 200×200 pixels large thumbnails from random YouTube videos.

By analyzing all these billions of pixels, the network itself managed to pay attention to that several things looked the same and subsequently built up ghost images of those things, which could be used by the machine to at a later stage being able to recognize them. Simply put, it learned what certain things look like.

If shown a picture of a cat, the “Computer” would present a different picture of a cat to show that it understands what it sees. It has simply taught itself from scratch. Scientists have not in any way told the software to look for something specific.

David A. Bader is the executive director of high-performance computing at the Georgia Tech College of Computing. He comments the breakthrough “The Stanford/Google paper pushes the envelope on the size and scale of neural networks by an order of magnitude over previous efforts,”.

He thinks that the rapid increases in computer technology would close the gap of artificial intelligence within a relatively short period of time. “The scale of modeling the full human visual cortex may be within reach before the end of the decade.”, he adds.

Despite the immense capacity of scale made possible by biological brains, this research at Google provides new insights that existing machine learning algorithms improve greatly as the machines are given access to large pools of data.

As with this network, IBM:s Watson computer is of similar design; with access to immense amounts of data. But able to decipher through the data to make its own deductions.

_______________

http://arxiv.org/abs/1112.6209

http://www-03.ibm.com/innovation/us/watson/index.html

______________________________

![OpenAI. (2025). ChatGPT [Large language model]. https://chatgpt.com](https://www.illustratedcuriosity.com/files/media/55136/b1b0b614-5b72-486c-901d-ff244549d67a-350x260.webp)

![OpenAI. (2025). ChatGPT [Large language model]. https://chatgpt.com](https://www.illustratedcuriosity.com/files/media/55124/79bc18fa-f616-4951-856f-cc724ad5d497-350x260.webp)

![OpenAI. (2025). ChatGPT [Large language model]. https://chatgpt.com](https://www.illustratedcuriosity.com/files/media/55099/2638a982-b4de-4913-8a1c-1479df352bf3-350x260.webp)